Home Stretch | Teaching computers to see things like people

TU/e researcher Bart Smets received his PhD cum laude for his dissertation on automatic image processing

To enable computers to detect cracks in bridges or recognize cancer in medical images, they have to learn to look at things like people do. PhD candidate Bart Smets combined neural networks with traditional mathematical methods to develop a model that can analyze images more efficiently.

“I was almost thirty already when I started my bachelor's program,” says Smets – almost forty now – in his unmistakable Flemish accent. After high school, he went to university in Leuven (“like everyone in Belgium”), but he didn’t like it. He dropped out and started working in logistics. “Manual labor. I worked as a forklift driver, in the warehouse, in distribution – that kind of thing.”

A radical turn

With his thirtieth birthday approaching, he started to ask himself: is this what I want to do for the rest of my life? “You can still take a radical turn at the age of thirty,” says Smets. He enrolled in the Mathematics program at TU/e and now, ten years later, he’s completing his PhD (with honors) for a dissertation on automatic image processing. “I would have never imagined this ten years ago.”

It was his supervisor, TU/e researcher Remco Duits, who ignited his passion for geometry and – in particular – image processing. Smets met Duits during his bachelor’s final project, wrote his master’s thesis under his supervision, and eventually also chose him as his PhD supervisor. “I don’t see my career here as a separate bachelor’s, master’s and PhD phase, but as one uninterrupted period in which I’ve worked with Remco,” he laughs. And if it’s up to him, the collaboration will continue after his PhD. “I just applied for a position as assistant professor in his group.”

Many areas of application

Image processing is a broad field that focuses on analyzing and manipulating images. “Applications include facial recognition to unlock your phone, license plate recognition in parking lots, and all kinds of security systems involving cameras,” Smets explains.

In industry there’s a lot of demand for accurate image analysis methods as well. “When you produce complex chips, small manufacturing errors can occur. We don’t want to detect those errors manually, so we try to automate the process,” he says. Rijkswaterstaat, the executive agency of the Dutch Ministry of Infrastructure and the Environment, can use similar technology to detect cracks in bridges.

Automatic image analysis also plays a crucial role in the medical sector, for instance when it comes to the early diagnosis of diabetes and other diseases. “Computers need to be able to accurately analyze images and correctly interpret complex information, like the way blood vessels branch off and intersect on a scan,” the PhD candidate explains.

Smets doesn’t focus on concrete applications, but on the development of technology that can be applied in various domains. “We work on improved methods for automatic image recognition that are widely applicable,” he says. “Technically, detecting a crack in a steel bridge is the same as finding a blood vessel in a retina.”

PDEs and neural networks

For automatic image processing, two important methods are used. First, you have the mathematical methods based on what are known as partial differential equations (PDEs). “These are mathematical equations that, when applied to an image, perform a well-defined operation,” Smets explains. “These equations can be simple, but you can also combine them to carry out complex image processing operations.”

“The great thing about PDEs is that you can exactly predict what will happen with an image by studying the equations, even if you’ve never seen that image before,” he continues. “This makes the mathematical method very reliable and predictable.”
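To make that predictability concrete, here is a minimal sketch that applies one simple PDE, the heat (diffusion) equation, to an image. The choice of equation, the periodic boundaries, and the names `diffuse`, `steps`, and `dt` are illustrative assumptions, not taken from Smets's dissertation. Whatever the input image, the equation guarantees two things in advance: the average brightness stays the same and the contrast never increases.

```python
import numpy as np

def diffuse(image, steps=10, dt=0.2):
    """Run explicit finite-difference steps of the heat equation u_t = laplacian(u).

    Assumes a unit pixel grid with periodic boundaries; dt <= 0.25 keeps
    the explicit scheme stable in two dimensions.
    """
    u = np.asarray(image, dtype=float).copy()
    for _ in range(steps):
        # Five-point Laplacian: sum of the four neighbours minus 4x the centre.
        lap = (np.roll(u, 1, axis=0) + np.roll(u, -1, axis=0)
               + np.roll(u, 1, axis=1) + np.roll(u, -1, axis=1) - 4.0 * u)
        u = u + dt * lap
    return u

# A synthetic noisy image (hypothetical data, used only for illustration).
rng = np.random.default_rng(0)
noisy = rng.normal(loc=0.5, scale=0.1, size=(32, 32))
smooth = diffuse(noisy)

print("mean before/after:", noisy.mean(), smooth.mean())  # equal up to rounding
print("std  before/after:", noisy.std(), smooth.std())    # std shrinks
```

Both properties follow from the equation itself, so they hold for any image fed into `diffuse`, including ones never seen before.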

Another important method is based on neural networks that are trained using large amounts of data. “The advantage of neural networks is that it’s relatively easy to have them carry out very complex tasks like object recognition,” says Smets. By training the model with thousands of pictures, you can teach it to recognize all pictures of a dog or car, for example. “But a disadvantage of this is that it requires a lot of high-quality data, which isn’t always available in, say, the medical sector.”

Neural networks also tend to be very big and require a lot of computing power and energy. “What’s more, as neural networks are black box models, you never know exactly how they reach a certain conclusion, which makes it difficult to assess the reliability of the results.”

Equivariance

In his research, Smets combined the advantages of both methods and developed a new technology: PDE-G-CNN. This method replaces certain operations in the neural network with PDEs. “The idea is that this combined model performs better than the separate methods,” Smets explains.

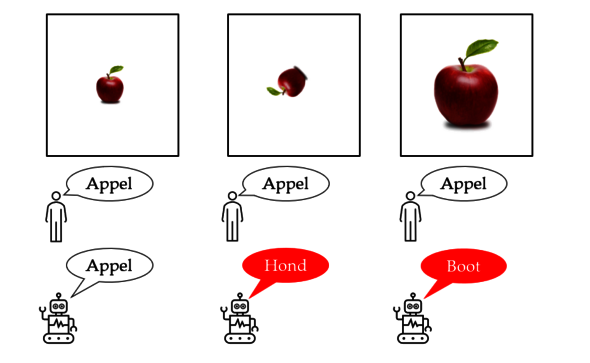

An important concept in image processing is equivariance. This means that when you transform an image, for instance by moving, rotating, or scaling it, the output must change in a consistent way. Smets shows a series of photos of the same apple. In the second photo, the apple has been rotated, and in the third one it has been enlarged. People immediately recognize it’s the same apple, but for a neural network things aren’t that obvious. “If the model has only seen photos of upright apples, it won’t recognize the rotated apple as such,” says Smets.
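Equivariance can be checked numerically on a toy example. The sketch below is not the PDE-G-CNN model; it only tests whether a single 3x3 filter commutes with a transformation, that is, whether filtering a transformed image gives the same result as transforming the filtered image. The helper names `filter_periodic` and `is_equivariant`, the two kernels, and the periodic image boundaries are assumptions made for illustration.

```python
import numpy as np

def filter_periodic(image, kernel):
    """Cross-correlate an image with a 3x3 kernel, using periodic boundaries."""
    out = np.zeros_like(image, dtype=float)
    for di in (-1, 0, 1):
        for dj in (-1, 0, 1):
            # np.roll by (-di, -dj) brings pixel (i+di, j+dj) to position (i, j).
            out += kernel[di + 1, dj + 1] * np.roll(image, (-di, -dj), axis=(0, 1))
    return out

def is_equivariant(kernel, image, transform):
    """True if filtering then transforming equals transforming then filtering."""
    return np.allclose(filter_periodic(transform(image), kernel),
                       transform(filter_periodic(image, kernel)))

# A kernel that looks the same after a 90-degree turn, and one that does not.
laplacian = np.array([[0., 1., 0.], [1., -4., 1.], [0., 1., 0.]])
gradient = np.array([[0., 0., 0.], [-1., 0., 1.], [0., 0., 0.]])

def shift(a):
    # Move the image 3 pixels down and 5 pixels right (wrapping around).
    return np.roll(a, (3, 5), axis=(0, 1))

rng = np.random.default_rng(1)
img = rng.random((16, 16))  # square, so a 90-degree rotation keeps the shape

print("laplacian, rotation:", is_equivariant(laplacian, img, np.rot90))
print("gradient,  rotation:", is_equivariant(gradient, img, np.rot90))
print("gradient,  shift:   ", is_equivariant(gradient, img, shift))
```

Both filters respect shifts, but only the rotation-symmetric one respects rotations. An ordinary network can freely learn filters like `gradient`, which is why rotated inputs can trip it up unless the symmetry is built into the model.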

Smets integrated PDEs into his model to teach the neural network to deal with these kinds of transformations. “We had to decide what equations we would use and how we would integrate them into the network. By doing that, we can deliver mathematical proof that the network will react in a certain way.”

Better performance, fewer parameters

Smets tested his model and compared it to existing neural networks. The accuracy of the results was slightly better, but the main advantage was the number of parameters that were required. “The parameters are like the network’s dials that you need to turn to optimize it,” Smets explains. “It goes without saying it’s easier to set 3,000 parameters than 300,000.”

With the new method, the same results can be achieved using considerably fewer parameters, which greatly improves the model’s efficiency. “The accuracy is the same, but we get there in a much more efficient way,” says Smets.
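For a sense of how such numbers add up, the sketch below counts the parameters in a small stack of standard convolution layers. The layer widths and the kernel size are hypothetical and were picked only to land in the same order of magnitude as the figures in the quote; they do not describe Smets's networks or the ones he compared against.

```python
def conv2d_params(in_channels, out_channels, kernel_size, bias=True):
    """Parameters in one standard 2D convolution layer."""
    weights = in_channels * out_channels * kernel_size * kernel_size
    return weights + (out_channels if bias else 0)

def count_params(channels, kernel_size):
    """Total parameters for a stack of conv layers with the given channel widths."""
    return sum(conv2d_params(c_in, c_out, kernel_size)
               for c_in, c_out in zip(channels, channels[1:]))

# Hypothetical architectures: same depth and kernel size, different widths.
wide = count_params([1, 64, 64, 64, 1], kernel_size=5)
narrow = count_params([1, 8, 8, 8, 1], kernel_size=5)

print("wide network:  ", wide)
print("narrow network:", narrow)
```

Because the count grows with the product of input and output channels, a model that needs fewer channels per layer to reach the same accuracy saves parameters very quickly.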

In addition, the interpretability of the results has improved, which is very important in self-driving cars and other sensitive applications. “If you manage to unlock your phone 99% of the time, that’s fine, but a car that only stops at a red light 99% of the time is unacceptable,” says Smets. “For those kinds of applications, you have to give hard guarantees for how the system will function in unfamiliar situations.” The PDE-G-CNN model is a step in the right direction: the integrated PDEs provide more insight into how the network functions and into the significance of its results, which allows it to offer better guarantees.

PhD in the picture

What is that on the cover of your dissertation?

“The patterns on the ceiling of Wells Cathedral in England, an example of the beautiful geometry in classical cathedrals. In addition, the cover shows a transition from a detailed to a simplified image; that’s also something we do with PDEs.”

You’re at a birthday party. How do you explain your research in one sentence?

“If I want to use my sense of humor, I say ‘this is a pen (he holds up a pen, ed.), but this is also a pen (he turns the pen, ed.)’. This may be obvious to us, but to a computer it’s not, so I develop mathematical models to teach computers to deal with this.”

How do you blow off steam outside of your research?

“I’m a gearhead, so if I want to blow off steam, I drive my car around a racetrack.”

What tip would you have liked to receive as a beginning PhD candidate?

“With Remco and my co-supervisor, Jim Portegies, I make a very good team. This makes the whole experience very nice and smooth. I think that’s the key to a good PhD.”

What is your next step?

“During my PhD, I set up a course that I taught to master’s students for two years. I loved that and would like to continue doing it. So I’m hoping I can stay at TU/e as an assistant professor, continue my research, and improve the methods by implementing the lessons learned.”
